- #How to install apache spark for scala 2.11.8 on windows archive#

- #How to install apache spark for scala 2.11.8 on windows windows 10#

- #How to install apache spark for scala 2.11.8 on windows code#

- #How to install apache spark for scala 2.11.8 on windows download#

- #How to install apache spark for scala 2.11.8 on windows windows#

Next comes the integration of Maven into Eclipse, following the screenshots above:

#How to install apache spark for scala 2.11.8 on windows archive#

We’ll use a light version of Eclipse Luna and then add Maven to Eclipse. Extract the content of the archive into a directory and start Eclipse. Variable: MAVEN_HOME Value: D:\apache-maven-3.3.9.

#How to install apache spark for scala 2.11.8 on windows download#

To use the Spark API in Java, we’ll choose Eclipse as the IDE, together with Maven. Start by downloading Apache Maven 3.3.9 and extracting it, e.g. to D:\apache-maven-3.3.9. Then add the environment variables for Maven.

#How to install apache spark for scala 2.11.8 on windows windows#

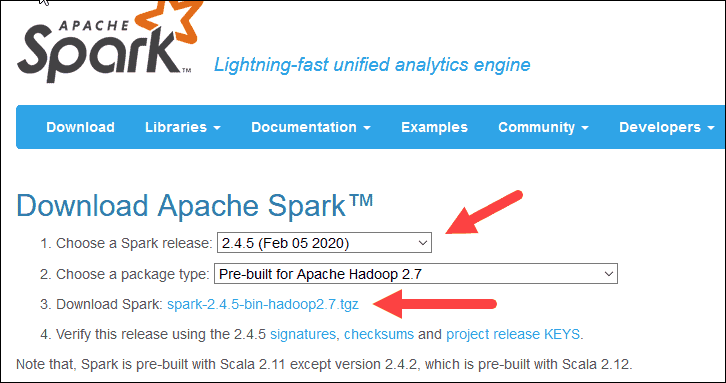

Do not worry if you do not know the language: we will use only very simple features of Scala, and basic knowledge of functional languages is all you need. If that’s not enough, Google is your friend.

You will need the Java Development Kit (JDK) for Spark to work locally.

Note: These instructions apply to Windows. If you use a different operating system, you have to adapt the system variables and the paths to the directories in your environment.

Download the JDK from Oracle’s site; version 1.7 is recommended. Check the installation from the bin directory under the JDK 1.7 directory by typing java -version. If the installation is correct, this command should display the version of Java installed.

Define the following system environment variables:

- Add the JAVA_HOME variable with value: C:\Program Files\Java\jdk1.7.x
- Add to the system PATH variable the value: C:\Program Files\Java\jdk1.7.x\bin

For Scala, define:

- Variable: SCALA_HOME Value: C:\Program Files (x86)\scala
- Variable: PATH (system) Value: C:\Program Files (x86)\scala\bin

Download the latest version from the Spark website. The most recent version at the time of this writing is 2.0.1. You can also select a specific version based on a version of Hadoop. I myself have downloaded Spark for Hadoop 2.7, and the file name is spark-2.0.1-bin-hadoop2.7.tgz.

Unzip the file to a local directory, such as D:\Spark, and add the required environment variables to the system. Then download Windows Utilities from the GitHub repo and paste it in D:\spark\spark-2.0.1-bin-hadoop2.7\bin.
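Once the environment variables are defined, a quick way to confirm that they are visible to Java programs is a small check like the one below. This is only a minimal sketch: the class name `EnvCheck` and the method `missingVariables` are hypothetical names chosen for illustration, and the list of variables (JAVA_HOME, SCALA_HOME, MAVEN_HOME) is simply the set named in this tutorial. It uses only the standard library and should compile on the JDK versions discussed here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

public class EnvCheck {

    // Variable names taken from this tutorial; adjust to your own setup.
    public static final List<String> REQUIRED =
            Arrays.asList("JAVA_HOME", "SCALA_HOME", "MAVEN_HOME");

    // Returns the names from `required` that are absent or blank in `env`.
    public static List<String> missingVariables(Map<String, String> env,
                                                List<String> required) {
        List<String> missing = new ArrayList<String>();
        for (String name : required) {
            String value = env.get(name);
            if (value == null || value.trim().isEmpty()) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // System.getenv() returns the environment of the current process.
        List<String> missing = missingVariables(System.getenv(), REQUIRED);
        if (missing.isEmpty()) {
            System.out.println("All expected variables are set.");
        } else {
            System.out.println("Missing variables: " + missing);
        }
    }
}
```

Open a new command prompt before running it, because a console that was already open does not see variables added afterwards.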

#How to install apache spark for scala 2.11.8 on windows code#

The code for this lab will be written in Java and in Scala, which, for what we will do, is much lighter than Java.

#How to install apache spark for scala 2.11.8 on windows windows 10#

Welcome! In this tutorial we will discover the Spark environment and its installation on Windows 10, then run some tests with Apache Spark to see what this framework offers and learn to use it.
